Developing an AI for Tablut Using the Minimax Algorithm and Alpha-Beta Pruning

22 Dec 2019

A few weeks ago, for CS 61B, we had a project in which we recreated the game Tablut. As part of it, we had to develop an AI for both the black and white sides of the game. The week before, we had learned about the Minimax algorithm, which is beautiful and powerful even though the idea behind it is quite simple.

In this blog post, I’ll explain how I built an AI for Tablut that uses the Minimax algorithm, and I’ll discuss the algorithm’s use cases and efficiency.

What is Tablut?

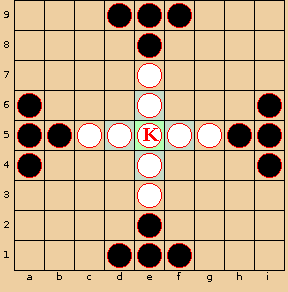

Tablut is an ancient Nordic and Celtic board game that starts with this layout:

The basic gist of the game is that both white and black pieces can move any distance orthogonally within the board as long as there are no pieces blocking the way (similar to a rook in chess). For white to win, the white side has to move the king to any edge of the board. For black to win, the black side has to capture the king. Both black and white pieces can capture an opposing piece by sandwiching it between 2 of their own pieces. A capture results in the sandwiched piece being removed.
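To make the movement and capture rules concrete, here is a minimal sketch in Java. Everything in it is an assumption made for illustration: the `char[][]` board encoding (`'.'` for an empty square), the class name, and both method names are hypothetical and are not the CS 61B project API. It also ignores the special rules for the throne and for capturing the king.

```java
/** Hypothetical helpers illustrating the basic rules; not the CS 61B API. */
final class TablutRulesSketch {

    /**
     * Returns true if a piece could slide from (r0, c0) to (r1, c1):
     * the move must stay in one row or one column, and every square
     * after the start, including the destination, must be empty ('.').
     * Assumes both squares are inside the board.
     */
    static boolean isUnblockedOrthogonalMove(char[][] board,
                                             int r0, int c0, int r1, int c1) {
        boolean sameRow = r0 == r1;
        boolean sameCol = c0 == c1;
        if (sameRow == sameCol) {
            return false; // same square (both true) or not orthogonal (both false)
        }
        int dr = Integer.signum(r1 - r0);
        int dc = Integer.signum(c1 - c0);
        int r = r0 + dr;
        int c = c0 + dc;
        while (true) {
            if (board[r][c] != '.') {
                return false; // a blocker is in the way
            }
            if (r == r1 && c == c1) {
                return true;
            }
            r += dr;
            c += dc;
        }
    }

    /**
     * Returns true if the piece at (r, c) has enemy pieces on two
     * opposite sides, either above and below or left and right.
     */
    static boolean isSandwiched(char[][] board, int r, int c, char enemy) {
        boolean vertical = r > 0 && r < board.length - 1
                && board[r - 1][c] == enemy && board[r + 1][c] == enemy;
        boolean horizontal = c > 0 && c < board[r].length - 1
                && board[r][c - 1] == enemy && board[r][c + 1] == enemy;
        return vertical || horizontal;
    }
}
```

In a full implementation the capture check would run on the neighbors of the piece that just moved, since only a move can complete a sandwich.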

For more info and additional rules, check out: http://inst.eecs.berkeley.edu/~cs61b/fa19/materials/proj/proj2/index.html

What is the Minimax Algorithm?

For part of the Tablut project, we were tasked with creating an AI. A good place to start is the Minimax algorithm!

Basically, the Minimax algorithm chooses a move for a player by assuming that, after each candidate move, both players keep making their best possible moves. Another way to say this is that the algorithm minimizes “the possible loss for a worst case (maximum loss) scenario”.

In general, to use the Minimax algorithm, we do the following:

- Create a heuristic function that takes in an input (ex: the board) and evaluates how good the position is. For example, we could say that the lower the value, the better the position is for one side; the higher the value, the better the position is for the other side.

- Construct a Game Tree which is a visualization of possible moves for a game.

- Each edge represents a move and each node holds the score of the resulting position, which is computed from the nodes beneath it.

- For example, let’s say we have the game tree as follows where the squares represent the heuristic score after the opponent’s move and the circles represent the heuristic score after our move. The higher scores favor us and lower scores favor the opponent:

We start from the top, which is the current board with us to move (the node value is unset for the moment). The next layer represents all the possible moves we can make from there, and the layer below that represents all the possible responses to each of those moves. We keep expanding all the way to the bottom. Then, we statically compute the heuristic score for each node on the bottom layer and apply the Minimax algorithm from the bottom up.

The bottom-level node values come first (0, 5, -4, 1, -3, 3, 0). At each level above, we select the child value that is optimal for the player whose turn it is. So at depth 2, with the circles, we select 5, 1, 3, and 6 since those are the maximal values of the children. At depth 1, we assume the opponent will select the minimal values of the children, so 1 and 3. Finally, at depth 0, we select the maximal value of the children, which is 3.
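The bottom-up selection can be sketched in a few lines of Java. This is only an illustration on a hand-built tree, not the project code: the `Node` class and `exampleTree` are hypothetical, and the tree is a cut-down version of the example that uses the depth 2 values (5, 1, 3, 6) as its leaves.

```java
import java.util.List;

/** Plain minimax over an explicit tree; all names here are illustrative. */
final class MinimaxTreeSketch {

    /** A node is either a leaf with a static score or an inner node with children. */
    static final class Node {
        final int score;           // meaningful only for leaves
        final List<Node> children; // empty for leaves

        Node(int score) {
            this.score = score;
            this.children = List.of();
        }

        Node(Node... kids) {
            this.score = 0;
            this.children = List.of(kids);
        }
    }

    /** Returns the value of NODE when it is the maximizer's (or minimizer's) turn. */
    static int minimax(Node node, boolean maximizing) {
        if (node.children.isEmpty()) {
            return node.score; // static evaluation at the bottom of the tree
        }
        int best = maximizing ? Integer.MIN_VALUE : Integer.MAX_VALUE;
        for (Node child : node.children) {
            int value = minimax(child, !maximizing);
            best = maximizing ? Math.max(best, value) : Math.min(best, value);
        }
        return best;
    }

    /** Root (our move) over two opponent nodes with leaves (5, 1) and (3, 6). */
    static Node exampleTree() {
        return new Node(
                new Node(new Node(5), new Node(1)),
                new Node(new Node(3), new Node(6)));
    }
}
```

Calling `MinimaxTreeSketch.minimax(MinimaxTreeSketch.exampleTree(), true)` takes the minimum of each pair (1 and 3) and then the maximum of those, giving 3.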

Here is a gif which may be more intuitive:

Also, here is a good visualization of how the Minimax algo works: https://www.youtube.com/watch?v=zDskcx8FStA.

More specifically, for our case with Tablut, we will do the following:

- Create a heuristic function based on the board. Lower values are better for the black player, while higher values are better for the white player. In our heuristic function we do the following:

    - Check the King’s surroundings. If the King is surrounded by black pieces, we lower the score since this indicates that the King is closer to being captured. If the King is close to the throne, we raise the score since that means the King is more protected from captures.

    - Check if the King is at the edge. If so, white has won and we set the score to infinity.

    - Check if the King has been captured. If so, black has won and we set the score to negative infinity.

    - Check the number of black pieces and white pieces (because some pieces could have been captured). If there are fewer black pieces than white pieces, we raise the score. If there are fewer white pieces than black pieces, we lower the score.

- Create the game tree and recursively find the best move:

    - If we meet either of our base cases, we return the heuristic score of the board. Our base cases are: 1) we have hit our recursive depth limit (which we arbitrarily set at 3) and 2) there is a winner based on the board layout.

    - We start by finding all the possible moves given the current board layout.

    - For each of the possible moves:

        - We make the move.

        - Given the board that has just changed from the move, we recursively call this same search function with the new board. The value returned by the recursive call is assigned to the variable responseScore.

        - If the responseScore is the best we’ve seen so far (the largest, for the maximizing side), we record the move in our private variable _lastFoundMove.

    - Return the best possible move for the AI (_lastFoundMove).
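The scoring rules from the first half of the list above can be sketched as follows. This is a rough illustration under stated assumptions, not the heuristic from the project: the `char[][]` encoding (`'K'` king, `'W'` white, `'B'` black, `'.'` empty), the throne sitting at the center square, and the weights (2 per adjacent black piece, 1 for staying near the throne) are all made up for the example.

```java
/** Hypothetical board-scoring sketch; encoding and weights are assumptions. */
final class HeuristicSketch {
    /** Stand-ins for positive and negative infinity. */
    static final int WHITE_WINS = Integer.MAX_VALUE;
    static final int BLACK_WINS = Integer.MIN_VALUE;

    /** Higher scores favor white, lower scores favor black. */
    static int heuristic(char[][] board) {
        int size = board.length;
        int kingRow = -1;
        int kingCol = -1;
        int white = 0;
        int black = 0;
        for (int r = 0; r < size; r++) {
            for (int c = 0; c < size; c++) {
                char piece = board[r][c];
                if (piece == 'K') {
                    kingRow = r;
                    kingCol = c;
                } else if (piece == 'W') {
                    white++;
                } else if (piece == 'B') {
                    black++;
                }
            }
        }
        if (kingRow < 0) {
            return BLACK_WINS; // no king on the board: it was captured
        }
        if (kingRow == 0 || kingCol == 0
                || kingRow == size - 1 || kingCol == size - 1) {
            return WHITE_WINS; // king reached an edge
        }
        // Material: more white than black raises the score, and vice versa.
        int score = white - black;
        // King safety: each black piece next to the king lowers the score.
        // The king is not on an edge here, so all four neighbors exist.
        int[][] dirs = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};
        for (int[] d : dirs) {
            if (board[kingRow + d[0]][kingCol + d[1]] == 'B') {
                score -= 2;
            }
        }
        // Throne proximity: on or directly next to the center square.
        int throne = size / 2;
        int distance = Math.abs(kingRow - throne) + Math.abs(kingCol - throne);
        if (distance <= 1) {
            score += 1;
        }
        return score;
    }
}
```

The exact weights matter less than the ordering they produce: wins and losses dominate everything else, and the remaining terms only break ties between non-terminal boards.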

This is what it looks like in code (gist):

Optimizing our algorithm using Alpha and Beta Pruning

As you can see, the Minimax algorithm takes quite a bit of time to run since we go through each possible move, then each response to each of those moves, and so on.

One key observation we can make about the Minimax algorithm is that we can short circuit some of our decisions.

(Source: https://en.wikipedia.org/wiki/File:AB_pruning.svg)

Before we start, we assign alpha to negative infinity and beta to infinity. Alpha represents the minimum score that the maximizing player is already guaranteed. Beta represents the maximum score that the minimizing player is already guaranteed.

In the tree example above, we start after the initial values at the bottom are generated (5, 6, 7, 4, 5, 3, etc.). We proceed from left to right. We prune when alpha is greater than beta.

Thus, we proceed as follows:

- We start at depth 4 with the values 5 and 6.

- We evaluate the min(current minimum, current value) since we are trying to minimize it. Then, we will update the node above to the minimum value.

- At node 5, min(5, infinity) equals 5, so the minimum is 5 and beta becomes 5. We also check if alpha > beta (negative infinity > 5, which is false) to see if we need to prune the branches to the right.

- At node 6, min(5, 6) is still 5. We check alpha > beta again: negative infinity > 5 is still false, so nothing is pruned.

- We carry this value all the way up as we backtrack. So at depth 3, beta is set to 5. At depth 2, alpha is set to 5. We go back down to depth 3 and finish evaluating that subtree.

- We go to the next set of values at depth 4: 7, 4, 5

- Alpha is set to 5 at the moment since it inherits that value from the parent.

- At node 7, the current minimum is 7, so beta is 7. Alpha is still < beta: 5 < 7.

- At node 4, we get min(7, 4) which is 4, so the current minimum is 4 and beta is 4. Now alpha is > beta: 5 > 4. So, we start pruning and we don’t consider node 5! Why don’t we care about any of the values to the right? We know that the parent node will be <= 4 (since we are minimizing), which is < 5. When we backtrack up to depth 2, we won’t care about this subtree’s value since we already have the 5 from the subtree to the left, which is > 4.

- We go to the next tree and follow a similar process. 3 trickles up to the node at depth 2 because at the bottom alpha < beta (3 < 5) and when we backtrack, alpha < beta.

- We continue this process until we get the top value.

- Note: we don’t traverse the subtree under the node with value 8 at depth 2 because alpha was already greater than beta (6 > 5) after evaluating the subtree to the left of it.
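The first cutoff in the walkthrough above can be reproduced with a short Java sketch. It is an illustration only: `Node`, `visitedLeaves`, and `exampleSubtree` are hypothetical names, and the tree is just the left corner of the diagram, a maximizing node over two minimizing nodes with leaves (5, 6) and (7, 4, 5). The sketch prunes on `alpha >= beta`, a common variant; the strict `alpha > beta` check from the text cuts at the same point in this example.

```java
import java.util.ArrayList;
import java.util.List;

/** Alpha-beta over an explicit tree; all names here are illustrative. */
final class AlphaBetaSketch {

    static final class Node {
        final int score;           // meaningful only for leaves
        final List<Node> children; // empty for leaves

        Node(int score) {
            this.score = score;
            this.children = List.of();
        }

        Node(Node... kids) {
            this.score = 0;
            this.children = List.of(kids);
        }
    }

    /** Records which leaves were actually evaluated, in order. */
    static final List<Integer> visitedLeaves = new ArrayList<>();

    static int alphaBeta(Node node, boolean maximizing, int alpha, int beta) {
        if (node.children.isEmpty()) {
            visitedLeaves.add(node.score);
            return node.score;
        }
        int best = maximizing ? Integer.MIN_VALUE : Integer.MAX_VALUE;
        for (Node child : node.children) {
            int value = alphaBeta(child, !maximizing, alpha, beta);
            if (maximizing) {
                best = Math.max(best, value);
                alpha = Math.max(alpha, best);
            } else {
                best = Math.min(best, value);
                beta = Math.min(beta, best);
            }
            if (alpha >= beta) {
                break; // the remaining siblings cannot change the result
            }
        }
        return best;
    }

    /** Max node over min(5, 6) and min(7, 4, 5). */
    static Node exampleSubtree() {
        return new Node(
                new Node(new Node(5), new Node(6)),
                new Node(new Node(7), new Node(4), new Node(5)));
    }
}
```

Running `alphaBeta(exampleSubtree(), true, Integer.MIN_VALUE, Integer.MAX_VALUE)` returns 5 and leaves `visitedLeaves` as 5, 6, 7, 4: the last leaf is never evaluated, which is the pruning step described for node 4.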

In the gist above, I’ve incorporated alpha and beta pruning.
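For readers who want to see how pruning slots into the depth-limited search described earlier, here is a minimal Java sketch. It is not the gist's code. The `Position` interface, the `Searcher` class, and the toy `Nim` game used to exercise it (take 1 or 2 stones, whoever takes the last stone wins) are all assumptions made so the example is self-contained; only the depth limit of 3, the `responseScore` variable, and the idea of recording the best root move come from the description above.

```java
import java.util.List;

/** Depth-limited search with alpha-beta pruning; names are hypothetical. */
final class FindMoveSketch {

    /** Assumed game abstraction; not the CS 61B Board API. */
    interface Position<M> {
        List<M> legalMoves();
        Position<M> play(M move); // returns the position after MOVE
        boolean hasWinner();
        int heuristic();          // higher favors the maximizing player
    }

    static final class Searcher<M> {
        static final int MAX_DEPTH = 3;
        private M lastFoundMove;

        M findMove(Position<M> start, boolean maximizing) {
            lastFoundMove = null;
            search(start, 0, maximizing, Integer.MIN_VALUE, Integer.MAX_VALUE);
            return lastFoundMove;
        }

        private int search(Position<M> pos, int depth, boolean maximizing,
                           int alpha, int beta) {
            // Base cases: depth limit reached, or the game is decided.
            if (depth == MAX_DEPTH || pos.hasWinner()) {
                return pos.heuristic();
            }
            // Assumes every undecided position has at least one legal move.
            int best = maximizing ? Integer.MIN_VALUE : Integer.MAX_VALUE;
            for (M move : pos.legalMoves()) {
                int responseScore =
                        search(pos.play(move), depth + 1, !maximizing, alpha, beta);
                boolean better = maximizing
                        ? responseScore > best : responseScore < best;
                if (better) {
                    best = responseScore;
                }
                // Only moves at the root are candidates for the answer.
                if (depth == 0 && (better || lastFoundMove == null)) {
                    lastFoundMove = move;
                }
                if (maximizing) {
                    alpha = Math.max(alpha, best);
                } else {
                    beta = Math.min(beta, best);
                }
                if (alpha >= beta) {
                    break; // prune the remaining moves
                }
            }
            return best;
        }
    }

    /** Toy game for testing: take 1 or 2 stones, last stone wins. */
    static final class Nim implements Position<Integer> {
        final int pile;
        final boolean maximizerToMove;

        Nim(int pile, boolean maximizerToMove) {
            this.pile = pile;
            this.maximizerToMove = maximizerToMove;
        }

        public List<Integer> legalMoves() {
            if (pile == 0) {
                return List.of();
            }
            return pile == 1 ? List.of(1) : List.of(1, 2);
        }

        public Position<Integer> play(Integer move) {
            return new Nim(pile - move, !maximizerToMove);
        }

        public boolean hasWinner() {
            return pile == 0;
        }

        public int heuristic() {
            if (pile != 0) {
                return 0; // undecided
            }
            // Whoever is NOT to move took the last stone and won.
            return maximizerToMove ? -1000 : 1000;
        }
    }
}
```

With a pile of 4 and the maximizer to move, `new FindMoveSketch.Searcher<Integer>().findMove(new FindMoveSketch.Nim(4, true), true)` picks 1, which leaves the opponent facing a pile of 3. Strict comparisons when updating the best move matter here: a pruned branch only returns a bound, so it must never replace a move whose score is exact.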

What’s next?

There are many other algorithms that could work better for Tablut. I chose Minimax because it seems to be the most common approach for building AIs for chess (https://stackoverflow.com/a/2026339/4698963, https://www.quora.com/Is-there-a-better-game-algorithm-than-Minimax-for-playing-chess) and because it is a good first algorithm to learn for game decisions. I’m still a newbie when it comes to AIs in games, but I plan to explore Monte Carlo Tree Search next.

Sources

http://inst.eecs.berkeley.edu/~cs61b/fa19/materials/lectures/lect22.pdf

http://inst.eecs.berkeley.edu/~cs61b/fa19/materials/book2/data-structures.pdf